In the world of web development, the ability to automate deployment processes massively increases efficiency. GitHub Actions provides a powerful, flexible platform for automating workflows, including Continuous Integration (CI) and Continuous Deployment (CD). In this article, I’ll show you how to automate the deployment of your website to an AWS S3 bucket every time you commit and push changes to your GitHub repository.

Things to know before we start

For the purpose of this article, I’m presuming that you already have an AWS S3 bucket (AWS has a great free tier, if you want to set one up), an IAM user and access key with the right permissions, and a GitHub repo (GitHub also has a great free tier).

How to set up a GitHub workflow to upload to an S3 bucket on commit and push

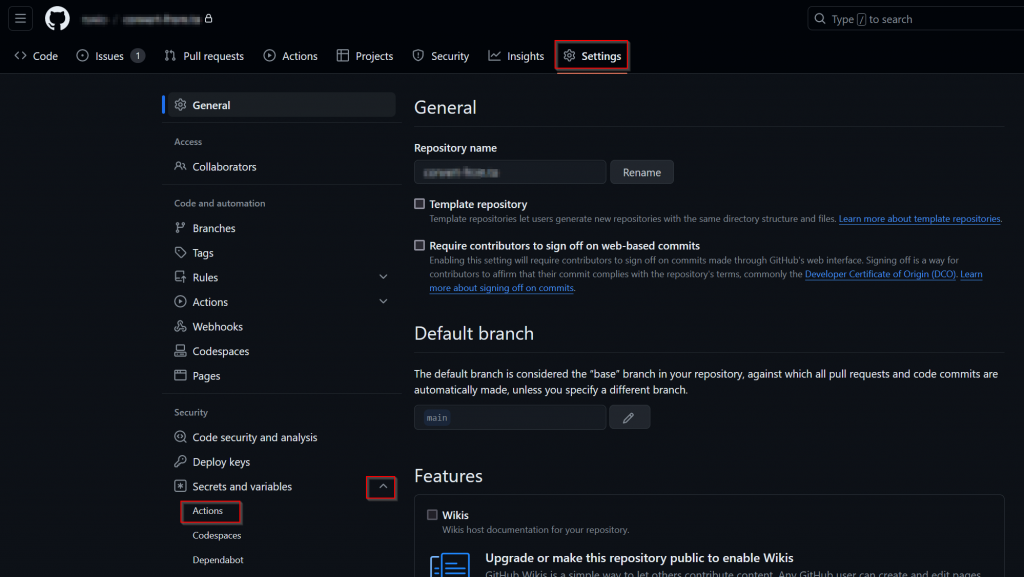

1) Navigate to your repository, select ‘Settings’, expand ‘Secrets and variables’ and then select ‘Actions’

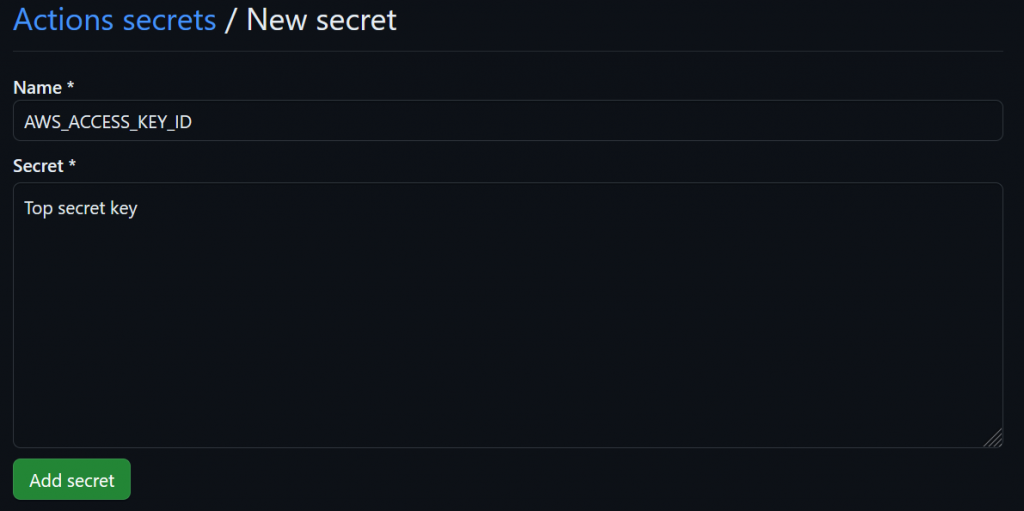

2) Click ‘New repository secret’

3) Add one for each of the below:

AWS_ACCESS_KEY_ID: The access key for your IAM user

AWS_SECRET_ACCESS_KEY: The secret access key for your IAM user

AWS_S3_BUCKET: The name of your S3 bucket

Never store credentials in your YAML files, or in any other file in your repo, as anyone who can read the repo (or its history) could use them, which could result in a security breach. Storing them as secrets instead keeps your credentials out of your code. If you have ever committed credentials to your repo, rotate them as soon as possible and use secrets instead.

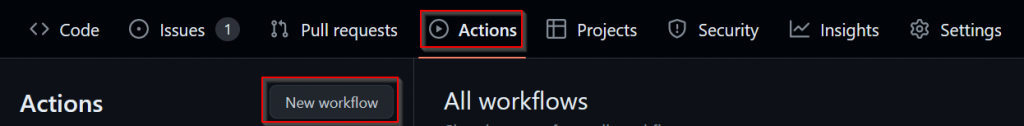

4) Select ‘Actions’, and then ‘New workflow’

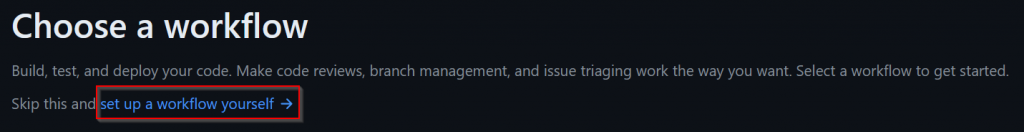

5) Select ‘set up a workflow yourself’

You can set the filename to whatever you want: leave it as ‘main.yml’, or give it something more descriptive, such as ‘upload-to-s3.yml’. GitHub saves it in your repo’s .github/workflows folder.

6) Enter the code below, making sure you change ‘aws-region’ to the region your bucket is in, and the branch if you don’t want to use ‘main’. You’ll notice I’ve included a few paths to exclude; you may want to exclude more:

```yaml
name: Upload Website

on:
  push:
    branches:
      - main   # change this if you deploy from a different branch

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v1

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: eu-west-2   # change this to your bucket's region

      - name: Deploy website to S3 bucket
        run: aws s3 sync ./ s3://${{ secrets.AWS_S3_BUCKET }} --delete --exclude '.git/*' --exclude '.github/*' --exclude '.gitignore'
```

GitHub to AWS S3 bucket YAML explained

Workflow name and trigger

```yaml
name: Upload Website

on:
  push:
    branches:
      - main
```

`name`: Specifies the name of the workflow, visible on your GitHub repository’s Actions page.

`on`: Defines the event that triggers the workflow. Here, it is set to activate on `push` events to the `main` branch only, ensuring the workflow runs only for updates to the primary branch of the repository.
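If you deploy from a different branch, only the `branches` list needs to change. The sketch below assumes a hypothetical branch called `production`, and also adds the optional `workflow_dispatch` trigger, which puts a ‘Run workflow’ button on the Actions page so you can redeploy without pushing a commit:

```yaml
name: Upload Website

on:
  push:
    branches:
      - production     # hypothetical branch name; use your own
  workflow_dispatch:   # optional: allows manual runs from the Actions tab
```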

Job configuration

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
```

`jobs`: Contains the collection of jobs the workflow will execute. This YAML defines one job named `deploy`.

`runs-on`: Specifies the type of virtual machine to run the job on. Here, it uses the latest Ubuntu image provided by GitHub, giving a stable, up-to-date environment for the deployment.
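GitHub periodically moves `ubuntu-latest` to a newer Ubuntu release, so the environment can change underneath you. If you would rather pin it, a sketch like the one below names a specific runner label (`ubuntu-24.04` is an assumption; check the labels GitHub currently offers) and adds `timeout-minutes`, so a stuck deploy fails quickly instead of running to the default six-hour job limit:

```yaml
jobs:
  deploy:
    runs-on: ubuntu-24.04   # pinned image instead of ubuntu-latest (assumed label)
    timeout-minutes: 10     # fail the job if it runs longer than 10 minutes
```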

Tasks to execute

```yaml
steps:
  - name: Checkout
    uses: actions/checkout@v1
```

`steps`: A sequence of tasks that are executed as part of the job.

`name: Checkout`: Names the first step, which checks out your repository on the GitHub runner. This is essential, as later steps need access to the repository content that is to be deployed.

`uses: actions/checkout@v1`: Runs the checkout action, which copies your repository into the GitHub Actions runner so that subsequent steps can access its content.
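The workflow above uses v1 of `actions/checkout`. Newer major versions are available, and a sketch of the same step pinned to v4 looks like this; only the version tag changes, and the step does the same job:

```yaml
steps:
  - name: Checkout
    uses: actions/checkout@v4   # newer major version of the same action
```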

Configure AWS Credentials

```yaml
- name: Configure AWS Credentials
  uses: aws-actions/configure-aws-credentials@v1
  with:
    aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
    aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
    aws-region: eu-west-2
```

`name: Configure AWS Credentials`: Names the step responsible for setting up AWS credentials in the GitHub Actions environment.

`uses: aws-actions/configure-aws-credentials@v1`: This action, published by AWS, makes the credentials available to later steps as environment variables, so the AWS CLI in the deploy step can authenticate.

`with`: Passes the AWS credentials and region to the action as inputs. The credentials are read from GitHub Secrets (`AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`), so they never appear in the file. `aws-region` is explicitly set to `eu-west-2` (London), matching where the S3 bucket is hosted.
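Two optional tweaks are sketched below; neither is part of the original setup. The action is pinned to a newer major version (v4), and the region is read from a repository variable (a hypothetical `AWS_REGION`, which you would create first under the ‘Variables’ tab next to ‘Secrets’) so you can change it without editing the YAML. Unlike secrets, variables are not masked in logs, which is fine for a region name:

```yaml
- name: Configure AWS Credentials
  uses: aws-actions/configure-aws-credentials@v4
  with:
    aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
    aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
    # Hypothetical repository variable holding your bucket's region, e.g. eu-west-2
    aws-region: ${{ vars.AWS_REGION }}
```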

Deploy Static Site to S3 Bucket

```yaml
- name: Deploy website to S3 bucket
  run: aws s3 sync ./ s3://${{ secrets.AWS_S3_BUCKET }} --delete --exclude '.git/*' --exclude '.github/*' --exclude '.gitignore'
```

`name: Deploy website to S3 bucket`: This step carries out the deployment of your website to the designated S3 bucket.

`run`: Executes the AWS CLI command to sync the contents of the current directory (the checked-out repository) to the specified S3 bucket. The command includes these options:

`--delete`: Removes files in the bucket that are not present in the source, keeping the bucket in step with the latest version of your site.

`--exclude`: Skips certain directories and files (`.git`, `.github`, `.gitignore`), ensuring that only relevant files are uploaded to the S3 bucket.
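Before the first real run, or after changing the excludes, it can help to preview what sync would do. The AWS CLI’s `--dryrun` flag prints the uploads and deletions without performing them. The sketch below also adds a couple of extra excludes as examples (`README.md` and a hypothetical `.env` file); adjust them to match your repo:

```yaml
- name: Preview deployment (no changes made)
  # --dryrun lists the operations sync would perform without performing them
  run: >
    aws s3 sync ./ s3://${{ secrets.AWS_S3_BUCKET }} --delete --dryrun
    --exclude '.git/*' --exclude '.github/*' --exclude '.gitignore'
    --exclude 'README.md' --exclude '.env'
```

The `>` folded style joins the lines with spaces, so the long command stays readable in the file but runs as a single command.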

This configuration automates deploying updates to a static website hosted on AWS S3, making the site simpler and more reliable to manage.

Test your GitHub to AWS S3 workflow

Now, as you’ve probably already guessed, testing is going to be easy. Just make a code change, commit and push it to your GitHub repo.

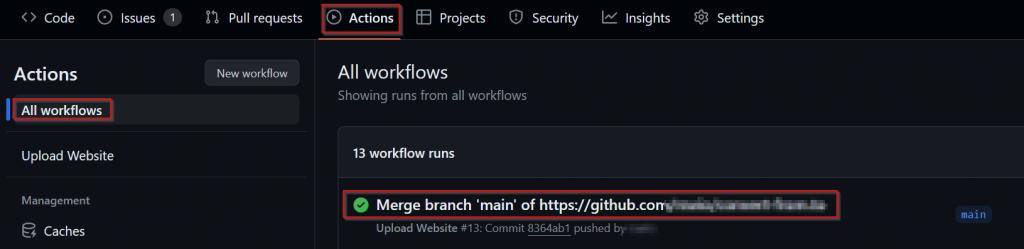

Then go to the repo and select ‘Actions’; you should be in the ‘All workflows’ section by default. You should see a run of your workflow there, though it may take a minute or two to appear, and if it’s still running, just be patient. When complete, it should look like the screenshot above, with a nice green tick next to it.

If your first run isn’t successful, select it and open the failed step to read its logs, which usually point to the cause.

Security and best practices

When automating deployment workflows, especially ones that involve sensitive operations such as AWS configuration, maintaining security and following best practices is paramount. Below are guidelines tailored to the GitHub Actions YAML file above:

1) Strong passwords and Multi-Factor Authentication (MFA): Always use strong passwords and enable MFA on both your GitHub and AWS accounts.

2) Use minimal IAM permissions: Although we didn’t cover the S3 bucket or IAM setup, it’s important that the IAM user whose credentials the workflow uses has only the permissions it needs. This should be limited to the actions required to deploy to the S3 bucket, such as s3:ListBucket (which sync uses to compare the bucket’s contents with your files), s3:PutObject, s3:GetObject and s3:DeleteObject.

3) Secure your secrets: Always store sensitive information such as AWS access keys and S3 bucket names within GitHub secrets. This prevents exposure of sensitive data in your repository and allows GitHub to handle these secrets securely.

4) Regularly rotate credentials: As a security best practice, regularly rotate your AWS access keys and update these in the GitHub Secrets to limit risks associated with key exposure.

5) Monitor AWS access logs: Keep an eye on AWS CloudTrail logs to monitor the actions performed by the credentials used. This helps in early detection of any unauthorised access or anomalous activities.

6) Specify resource-level permissions: When setting up the IAM policies, explicitly define the resources that the credentials should have access to, such as specifying the exact S3 bucket name in the policy.

7) Exclude sensitive files: The workflow excludes specific files and directories (.git, .github and .gitignore) from the sync. Because everything else in the repository is uploaded, make sure no sensitive configuration files or environment-specific files (such as .env files) are inadvertently included.
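The same least-privilege idea applies on the GitHub side, to the `GITHUB_TOKEN` every workflow run receives. This workflow only needs to read the repository, so a sketch like the one below, placed at the top level of the workflow file, restricts the token to read access. The `concurrency` block is an optional extra, not in the original workflow, that stops two deployments to the same bucket from running at once; the group name is hypothetical:

```yaml
permissions:
  contents: read   # the job only needs to check out the code

concurrency:
  group: deploy-website       # hypothetical group name
  cancel-in-progress: false   # wait for a running deploy to finish instead of cancelling it
```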

Conclusion

Automating the deployment of your website to an AWS S3 bucket using a GitHub workflow streamlines your development and deployment processes, ensuring your web content is consistently updated with minimal manual intervention. By leveraging GitHub’s integration with AWS through the use of actions such as actions/checkout and aws-actions/configure-aws-credentials, you can efficiently manage the deployment lifecycle while adhering to security best practices.

No more will you need to manually upload files via a browser, or via an SFTP client. This not only makes for a streamlined workflow, but also helps to keep your site secure.

Frequently asked questions

What steps should be taken to ensure the deployment process handles large files or directories efficiently?

To handle large files or directories efficiently, use the AWS CLI’s sync command rather than cp, as this workflow already does: sync only uploads new or changed files, reducing the amount of data transferred. For large files, the CLI’s s3 commands automatically switch to multipart uploads above a size threshold, and you can tune that threshold and the number of parallel transfers to improve upload speed and reliability.
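The CLI’s S3 transfer settings can be tuned in a step placed before the deploy step. The values below are illustrative assumptions, not recommendations: `multipart_threshold` sets the file size above which multipart upload is used, `multipart_chunksize` sets the size of each part, and `max_concurrent_requests` sets how many transfers run in parallel:

```yaml
- name: Tune AWS CLI S3 transfers
  run: |
    # Illustrative values; the CLI defaults are 8MB threshold, 8MB chunks and 10 concurrent requests
    aws configure set default.s3.multipart_threshold 64MB
    aws configure set default.s3.multipart_chunksize 16MB
    aws configure set default.s3.max_concurrent_requests 20
```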

How can users integrate other AWS services, such as CloudFront, with the deployment process to enhance website performance?

Integrating CloudFront with the deployment process involves setting up a CloudFront distribution and invalidating the cache automatically post-deployment. Modify the GitHub workflow to include steps for invalidating CloudFront cache using AWS CLI commands after files are uploaded to the S3 bucket.
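A sketch of such an invalidation step, added after the deploy step, is below. It assumes a hypothetical `CLOUDFRONT_DISTRIBUTION_ID` secret holding your distribution’s ID, and the IAM user would also need the cloudfront:CreateInvalidation permission. The `"/*"` path clears every cached file and counts as a single invalidation path:

```yaml
- name: Invalidate CloudFront cache
  # CLOUDFRONT_DISTRIBUTION_ID is a hypothetical secret you would add alongside the others
  run: >
    aws cloudfront create-invalidation
    --distribution-id ${{ secrets.CLOUDFRONT_DISTRIBUTION_ID }}
    --paths "/*"
```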

What are some common errors encountered during the deployment process and how can they be resolved?

Common errors during deployment include permission issues (such as AccessDenied), an incorrect bucket name or configuration, and network-related problems. To resolve these, ensure the AWS credentials used in the GitHub workflow have the required permissions for the S3 bucket (and CloudFront, if you use it), and check that the AWS_S3_BUCKET secret matches your bucket’s name exactly and that aws-region matches the bucket’s region. Network problems on GitHub-hosted runners are usually temporary, so re-running the failed job is often enough.
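When a run fails with an access error, a temporary diagnostic step placed after ‘Configure AWS Credentials’ can narrow it down. `aws sts get-caller-identity` shows which IAM identity the workflow is actually using (it needs no extra permissions), and `aws s3 ls` checks that this identity can list the bucket:

```yaml
- name: Check AWS identity and bucket access
  run: |
    # Prints the account ID and ARN of the credentials in use
    aws sts get-caller-identity
    # Fails if the bucket name is wrong or the user lacks s3:ListBucket on it
    aws s3 ls s3://${{ secrets.AWS_S3_BUCKET }}
```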